Finding the partition number the data for our is in We need the partitions to be empty on this table ,SYSDATETIME() - CaptureDateTime - SyncBatchId - uniqueidentifier ,NEWID() - CaptureBatch - uniqueidentifier ,'Insert RawSource Here' - RawSource - varchar(200) ,'Insert Text Here' - Text - varchar(400)

,'Insert EntityName Here' - EntityName - varchar(255) ,'Insert Name Here' - IndividualName - varchar(255) Lets insert some data into the staging table (this can be your population from Transfer.CaptureSync)

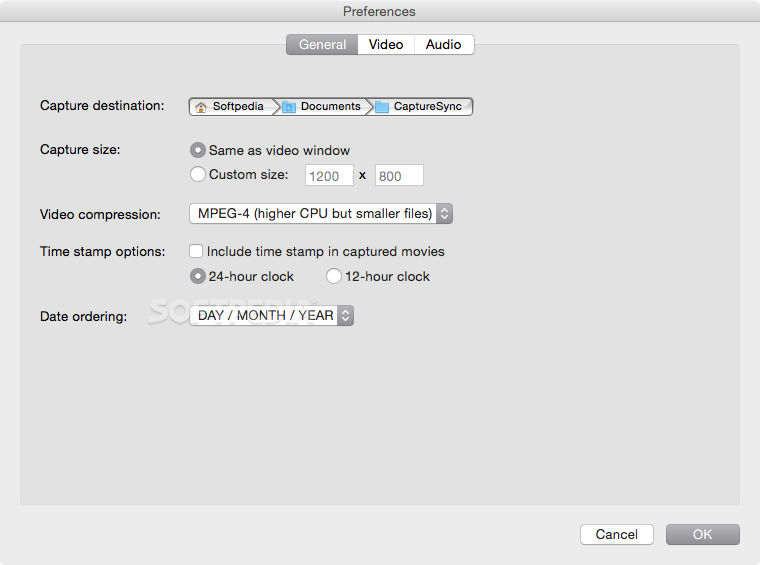

#Capturesync archive#

Creating an archive table with required partitions just so that we can easily transfer out of the data tableĬREATE PARTITION FUNCTION PF_ArchiveCapture ( INT )ĬREATE PARTITION SCHEME PS_ArchiveCaptureĬREATE CLUSTERED INDEX IXC_ArchiveCapture_TaskSourceID Creating the Staging Table with required partitionsĬREATE PARTITION FUNCTION PF_StagingCapture ( INT )ĬREATE PARTITION SCHEME PS_StagingCaptureĬREATE CLUSTERED INDEX IXC_StagingCapture_TaskSourceID TO (, ,, ,, , ) ĬREATE CLUSTERED INDEX IXC_DataCapture_TaskSourceID

Creating the Data Table with required partitionsĬREATE PARTITION FUNCTION PF_DataCapture ( INT )ĪS RANGE RIGHT FOR VALUES ( 1, 2, 3, 4, 5 ) IF EXISTS ( SELECT * FROM sys.databases WHERE name = 'PlayGround' )ĪLTER DATABASE SET SINGLE_USER WITH ROLLBACK IMMEDIATE The staging of data should not take much time as all you would be doing is copying the data, without making any specific updates or deletes.Īs discussed a sample for partitioning (make sure you do not do this on production or have any important database called "Playground") :) : USE If not you could have a staging table which is partitioned, with the structure same as the Data.Capture Table. This is assuming that the tables are the same structure. Putting the partition switching as a transaction, the transfer process should be reduced to a few seconds rather than hours while maintaining data integrity If you partition the Transfer.CaptureSync table and Data.Capture table by TaskSourceId, you should be able to switch in and switch out the partitions. Have you looked at partitioning the tables?

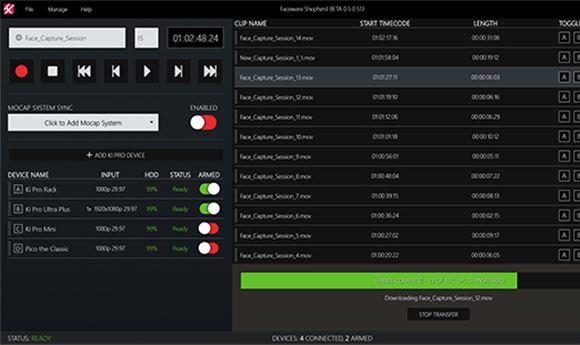

I am looking to SQL experts, which I am not, for input. Is this the most efficient and optimal approach to achieve this objective? I realize "most efficient and optimal" is somewhat subjective. This procedure takes on average 10-12 hours a day to run, using precious resources for a very long time. Given all this information, we return to the problem at hand. The Data.Capture table is approximately 4 million records, and Transfer.CaptureSync table takes in approximately 3 million records per day. If a group of records for a given TaskSourceId does not exist in Transfer.CaptureSync, leave the last transferred set of records related to that TaskSourceId in Data.Capture untouched.Īfter the process is completed each day, the Transfer.CaptureSync table is truncated. Replace information in Data.Capture by TaskSourceId with information in Transfer.CaptureSync. Grab latest information from a website and write to Transfer.CaptureSync I hope this explanation makes sense - to recap: So when we don't have new records for a given TaskSourceId on a given day, we just want to leave the latest ones alone that are already in Data.Capture. However, not all TaskSourceId's have new records every day, and which ones do, can differ from day to day. The purpose of this stored procedure is to then go into the records of Transfer.CaptureSync, and one TaskSourceId at a time, replace the group of records in Data.Capture related to that TaskSourceId, so we always have the latest information in Data.Capture for a given TaskSourceId. Once every 24 hours, web scrapers write data to the Transfer.CaptureSync table, which acts as a holding table. One TaskSourceId has many records coming from the same web source. The records in Data.Capture are grouped by TaskSourceId. This table is refreshed once every 24 hours (via the above stored procedure) to create a dataset of the latest information. In a nutshell: Data.Capture is our production table which contains all of our latest records. WHERE TaskSourceId = SyncBatchId != get the next sourceįETCH NEXT FROM curSources INTO - end of the cursor WHERE TaskSourceId = SyncBatchId = remove the existing rows WHERE TaskSourceId = flag the new rows as active insert the rows into the capture table, but do not flag as active Execution of this procedure is currently taking on average 10-12 hours a day to run. We run this procedure once every 24 hours. Azure SQL Server 2016 w/ Azure SQL Database:

0 kommentar(er)

0 kommentar(er)